Robot readers are stealing your ad money and poisoning your data. Discover why traditional defenses fail and what actually works.

Key takeaways

- AI-generated traffic now mimics humans perfectly, quietly poisoning the data your entire business relies on.

- Optimizing for fake AI engagement destroys advertiser trust and permanently drives revenue to walled gardens.

- Reactive defense is dead; only real-time behavioural analysis within your DSP can stop today’s AI bots.

It’s 2026, and traffic numbers have never been higher. As adtech experts, we’ve watched pageviews skyrocket over the past few years. At first glance, that seems logical: the internet keeps reaching more people, users spend more time scrolling, and finding information has never been easier.

However, there’s another number quietly breaking records: the share of invalid traffic (IVT).

According to a DoubleVerify Fraud Lab report published last year, general invalid traffic volumes increased by roughly 86% year over year in the second half of 2024.

Why does this matter, and what does it mean for publishers? Let’s dive into it.

First, a quick reminder of what IVT is. Invalid traffic (IVT) refers to any impression, click, or pageview that doesn’t come from a real human being. It includes bots, crawlers, and automated systems that imitate user activity. In short, it is traffic that looks good on paper but brings no real value.

In the past, IVT mostly came from basic bots: repetitive scripts crawling websites, easy to spot and filter.

Unfortunately, that’s not the reality anymore. Today, the real enemy isn’t the old-school bot; it’s AI-generated traffic.

AI-driven systems now simulate human behaviour with uncanny precision: they scroll, pause, “read,” and even move the mouse as a person would. Some are tied to AI content farms or automated browsing tools that create fake engagement loops, in which automated visitors move from one AI-made page to another in endless circles.

The result? A perfectly normal-looking spike in analytics that’s completely synthetic.

A growing problem for publishers

This new generation of IVT is far more dangerous because it pollutes data at scale: the numbers still look healthy, but the foundation underneath is compromised.

AI-generated invalid traffic doesn’t just inflate metrics; it corrupts the quality of information that publishers, advertisers, and platforms rely on to make decisions.

It blends synthetic behaviour with real user signals so effectively that even advanced detection systems struggle to tell them apart. Scrolls, clicks, and session times look authentic, but they’re often simulated by algorithms trained to mimic human behaviour down to the millisecond.

This means audience data is being quietly corrupted. Engagement rates become unreliable, and metrics that once reflected real interest are now padded with empty interactions.

Articles may appear to retain readers longer, but in reality, many of those “readers” are AI scripts generating synthetic attention.

Audience segments that once offered precise targeting become contaminated by fake profiles, skewing advertiser performance and making campaigns appear less effective than they truly are.

For publishers, the financial consequences are serious. At first, inflated numbers might look like growth, but over time, advertisers notice that conversions don’t follow, that cost-per-acquisition rises, and that inventory from certain domains or supply paths underperforms.

When that happens, trust collapses. Buyers start lowering bids, pulling budgets, or moving to safer, cleaner environments. CPMs drop, and recovering that lost confidence can take months or even years.

It doesn’t stop there. Yield optimization systems that rely on engagement metrics end up prioritizing the wrong inventory, essentially training algorithms on poisoned data.

The more publishers optimize toward what looks successful, the deeper they drift into inefficiency, monetizing fake volume at the expense of real value. This creates a vicious cycle where poor data drives poor optimization, which in turn attracts more low-quality traffic.

What used to be a technical nuisance has become a real structural threat. It’s no longer about cleaning a few bad impressions; it’s about preserving the integrity of the entire digital advertising ecosystem.

AI-generated IVT undermines the relationship between publishers and advertisers at its core. If buyers cannot trust the metrics they see, they’ll stop trusting the inventory itself.

And when that happens, money flows toward walled gardens or direct deals that can guarantee verified traffic.

So how can publishers actually tell when something’s off?

The truth is, AI-generated traffic rarely looks like a sudden spike or an obvious anomaly. It’s a slow distortion. The dashboards still show healthy trends, but the behaviour beneath starts to shift in ways that don’t make sense.

Session durations increase unnaturally, pages per session rise, and bounce rates fall, yet conversions and genuine engagement don’t move. Advertisers might start asking questions about performance drops even though your analytics show record reach.

That gap between what the data says and what the results show is where synthetic traffic hides.
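One way to make that gap measurable is to compare how fast an engagement metric grows against how fast the conversion rate grows over the same period. The sketch below is only an illustration: the `PeriodStats` fields, the choice of session duration as the engagement metric, and the 0.25 threshold are all assumptions, not values from any specific analytics product.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Aggregated analytics for one reporting period (hypothetical fields)."""
    sessions: int
    avg_session_seconds: float
    conversions: int


def pct_change(old: float, new: float) -> float:
    """Relative change from old to new; old must be non-zero."""
    return (new - old) / old


def engagement_conversion_gap(prev: PeriodStats, curr: PeriodStats) -> float:
    """Growth in session duration minus growth in conversion rate.

    A large positive value means sessions look 'better' while the
    conversion rate stagnates or falls.
    """
    prev_rate = prev.conversions / prev.sessions
    curr_rate = curr.conversions / curr.sessions
    duration_growth = pct_change(prev.avg_session_seconds, curr.avg_session_seconds)
    conversion_growth = pct_change(prev_rate, curr_rate)
    return duration_growth - conversion_growth


def looks_suspicious(prev: PeriodStats, curr: PeriodStats, threshold: float = 0.25) -> bool:
    """Flag the period when the gap exceeds an (assumed) threshold."""
    return engagement_conversion_gap(prev, curr) > threshold


if __name__ == "__main__":
    last_month = PeriodStats(sessions=100_000, avg_session_seconds=60.0, conversions=2_000)
    this_month = PeriodStats(sessions=150_000, avg_session_seconds=90.0, conversions=2_000)
    print(looks_suspicious(last_month, this_month))
```

A flag from a check like this is a prompt to investigate, not proof of fraud: a redesign or a new content format can also lift session time without lifting conversions.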

Some of the signs are subtle. You might see an unexpected surge from countries that have never been core to your audience.

You might notice traffic peaks at odd hours, perfectly spaced and recurring with robotic consistency.

Or you might see a growing mismatch between your ad server impressions and your on-page analytics, meaning something, somewhere, is triggering calls that no real user made.
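The three signs above can each be turned into a rough numeric check. The following is a minimal sketch under stated assumptions: the function names, the input shapes (traffic shares per country, event timestamps in seconds, two impression counts), and the default thresholds are hypothetical and would need tuning against your own data.

```python
from statistics import mean, pstdev


def surging_countries(baseline_share, current_share, min_share=0.05, factor=3.0):
    """Countries whose share of traffic is now at least `factor` times
    their baseline share and also above `min_share` of all traffic."""
    flagged = []
    for country, share in current_share.items():
        base = baseline_share.get(country, 0.0)
        # Floor the baseline so brand-new countries do not divide by zero.
        if share >= min_share and share >= factor * max(base, 0.001):
            flagged.append(country)
    return sorted(flagged)


def interval_regularity(timestamps):
    """Coefficient of variation of the gaps between consecutive events.

    Values near 0 mean the events are almost perfectly evenly spaced,
    which is unusual for human-driven traffic.
    """
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        raise ValueError("need at least three distinct timestamps")
    return pstdev(gaps) / mean(gaps)


def impression_discrepancy(ad_server_impressions, analytics_ad_views):
    """Relative excess of ad-server impressions over on-page measured views."""
    if analytics_ad_views <= 0:
        raise ValueError("analytics_ad_views must be positive")
    return (ad_server_impressions - analytics_ad_views) / analytics_ad_views


if __name__ == "__main__":
    print(surging_countries({"SK": 0.6, "CZ": 0.3, "VN": 0.01},
                            {"SK": 0.5, "CZ": 0.25, "VN": 0.2}))
    print(interval_regularity([0, 3600, 7200, 10800]))
    print(impression_discrepancy(120_000, 100_000))
```

Some discrepancy between ad server and analytics counts is normal (ad blockers, tag load order, differing counting methods), so the useful signal is a discrepancy that grows over time rather than any single value.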

These are the most common red flags. But the difficulty today is that AI-generated invalid traffic doesn’t behave like old-school bots.

It doesn’t hammer your site with the same IP, it doesn’t sit idle, and it doesn’t refresh endlessly. It learns. It observes your site’s structure, mimics natural user patterns, simulates scrolls, clicks, pauses, and even reading speed.

It knows how to look real because it’s built by systems that were trained on real human behaviour.

That’s why traditional protection layers are falling behind. They were designed for the internet of 2015, not the algorithmic complexity of today.

By the time a quarterly audit detects a pattern, the AI system has already evolved and adapted. The clean-up comes too late; the data is already polluted, and the trust has already decayed.

How can publishers counter invalid traffic?

To counter AI-generated IVT, publishers need protection that works in real time, not as an afterthought.

This is where the right demand-side platform (DSP) becomes more than a monetization partner; it becomes part of the publisher’s defense system.

A DSP that owns its entire technology stack can do what resellers and outsourced networks simply can’t. It can analyze behaviour as it happens, not days later.

It can correlate bid requests, impression logs, and engagement signals instantly to identify patterns no human eye would ever catch.

Because it’s built on proprietary infrastructure, it doesn’t rely on third-party APIs or generalized blacklists; it learns directly from the ecosystem it operates in.

When detection is embedded into the bidding logic itself, invalid traffic rarely gets the chance to enter the system. That’s the difference between reactive fraud detection and proactive protection.
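To illustrate what "embedded into the bidding logic" can mean in practice, here is a deliberately simplified sketch of a pre-bid gate: each incoming request is scored before any bid is placed, and high-scoring requests are skipped. Everything in it is an assumption made for illustration, including the `RequestSignals` fields, the weights, and the cut-off. A production system would rely on learned behavioural models rather than a handful of fixed rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSignals:
    """Signals available at bid time (hypothetical, for illustration only)."""
    datacenter_ip: bool            # request originates from a hosting provider
    requests_last_minute: int      # requests seen from this device in the last minute
    interval_cv: float             # timing regularity; lower means more machine-like
    has_viewability_history: bool  # device has previously produced viewable impressions


def ivt_score(signals: RequestSignals) -> float:
    """Combine simple rules into a score between 0 (clean) and 1 (likely invalid)."""
    score = 0.0
    if signals.datacenter_ip:
        score += 0.5
    if signals.requests_last_minute > 60:
        score += 0.3
    if signals.interval_cv < 0.1:
        score += 0.3
    if not signals.has_viewability_history:
        score += 0.1
    return min(score, 1.0)


def should_bid(signals: RequestSignals, max_score: float = 0.5) -> bool:
    """Skip the auction entirely when the score reaches the cut-off."""
    return ivt_score(signals) < max_score


if __name__ == "__main__":
    human = RequestSignals(False, 4, 0.9, True)
    bot = RequestSignals(True, 200, 0.02, False)
    print(should_bid(human), should_bid(bot))
```

The point of the design is the placement of the check: the decision happens before the bid, so suspicious requests never generate an impression that has to be cleaned up later.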

For publishers, that means cleaner auctions, higher buyer confidence, and stronger long-term yield stability.

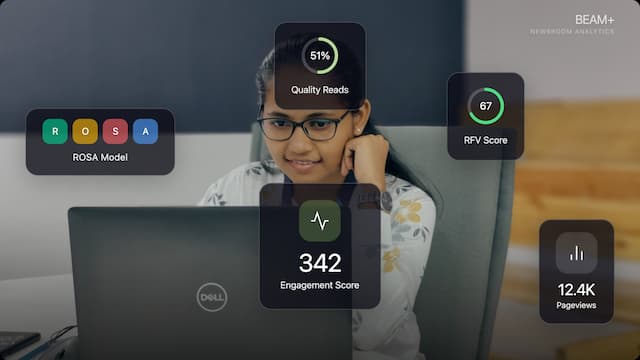

But beyond detection, the right DSP helps publishers see what’s actually happening beneath the surface.

The right DSP provides transparency, real data, and clear reports. It helps identify which sources, formats, or placements are more exposed to AI-generated IVT, and why. It gives publishers visibility into where their demand partners sit on the trust spectrum.
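As a rough idea of what such a report boils down to, the sketch below ranks traffic sources by their share of impressions flagged as invalid. The row format `(source, impressions, invalid_impressions)` and the source names are assumptions; in practice the invalid counts would come from whatever detection your partner runs.

```python
from collections import defaultdict


def ivt_rate_by_source(rows):
    """Rank sources by IVT rate, highest first.

    rows: iterable of (source, impressions, invalid_impressions) tuples.
    Returns a list of (source, rate) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # source -> [impressions, invalid]
    for source, impressions, invalid in rows:
        totals[source][0] += impressions
        totals[source][1] += invalid
    rates = {
        source: invalid / impressions
        for source, (impressions, invalid) in totals.items()
        if impressions > 0
    }
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    sample = [
        ("organic", 10_000, 200),
        ("paid_social", 4_000, 1_200),
        ("organic", 10_000, 400),
        ("referral", 2_000, 100),
    ]
    for source, rate in ivt_rate_by_source(sample):
        print(f"{source}: {rate:.1%}")
```

The same grouping works for formats or placements: swap the first element of each row for the dimension you want to compare.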

That visibility is power: it allows them to make smarter choices about traffic acquisition, inventory distribution, and audience development. This is exactly where DSPs with in-house technology have a structural advantage.

They aren’t bound by the limits of third-party verification tools that detect fraud only after the damage is done. They can refine their models daily, adapting at the same pace as the AI they are up against.

For publishers, that means having a partner that not only delivers revenue, but protects the foundation that revenue depends on: credibility.

Every clean impression builds a case for long-term trust. Every verified click tells advertisers they’re investing in real audiences, not fabricated patterns.

And every partnership built on transparency strengthens the entire open web.

Now that bots can imitate humans and AI can generate infinite pageviews, authenticity has become the most valuable metric.

The publishers who thrive won’t be the ones with the biggest numbers, but the ones with the cleanest ones.

May is an ad-tech expert who uses her hands-on experience across the programmatic ecosystem to explain its real challenges, opportunities, and people through clear, practical, jargon-free insights.